We Aren't Close To Creating A Rapidly Self-Improving AI

The current paradigm will not lead to an overnight superintelligence explosion.

When discussing artificial intelligence, a popular topic is recursive self-improvement. The idea in a nutshell: once an AI figures out how to improve its own intelligence, it might be able to bootstrap itself to a god-like intellect, and become so powerful that it could wipe out humanity. This is sometimes called the AI singularity or a superintelligence explosion. Some even speculate that once an AI is sufficiently advanced to begin the bootstrapping process, it will improve far too quickly for us to react, and become unstoppably intelligent in a very short time (usually described as under a year). This is what people refer to as the fast takeoff scenario.

Recent progress in the field has led some people to fear that a fast takeoff might be around the corner. These fears have led to strong reactions; for example, a call for a moratorium on training models larger than GPT-4, in part due to fears that a larger model could spontaneously manifest self-improvement.

However, at the moment, these fears are unfounded. I argue that an AI with the ability to rapidly self-improve (i.e. one that could suddenly develop god-like abilities and threaten humanity) still requires at least one paradigm-changing breakthrough. My argument leverages an inside-view perspective on the specific ways in which progress in AI has manifested over the past decade.

Summary of my main points:

Using the current approach, we can create AIs with the ability to do any task at the level of the best humans — and some tasks much better. Achieving this requires training on large amounts of high-quality data.

We would like to automatically construct datasets, but we don’t currently have any good approach to doing so. Our AIs are therefore bottlenecked by the ability of humans to construct good datasets; this makes a rapid self-improving ascent to godhood impossible.

To automatically construct a good dataset, we require an actionable understanding of which datapoints are important for learning. This turns out to be incredibly difficult. The field has, thus far, completely failed to make progress on this problem, despite expending significant effort. Cracking it would be a field-changing breakthrough, comparable to transitioning from alchemy to chemistry.

1. Current Methods Will Reach Human-Level On Anything

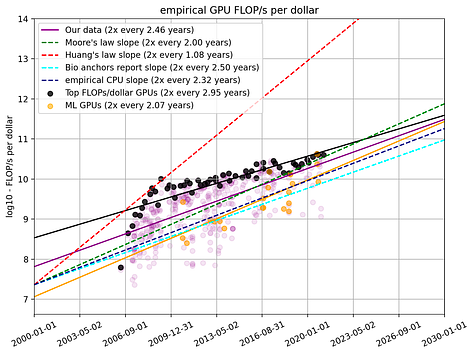

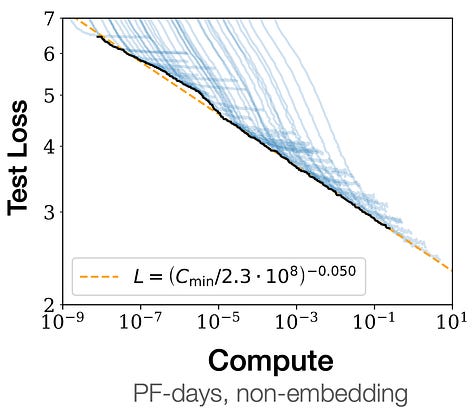

I’ll begin by explaining the way in which AI has made massive progress in recent years. Deep learning, an approach that involves training large neural networks on massive datasets with gradient descent, was discovered in 2012 to outperform all other methods by a large margin. In follow-up work over the subsequent decade, we’ve been able to identify three important trends:

Extrapolating, one must conclude that the performance of deep learning models will continue to improve, and the rate at which this will occur can be approximated by the trend-lines in these plots.
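To make the extrapolation step concrete: scaling trend-lines like these are commonly modeled as power laws, which appear as straight lines on log-log axes. The sketch below assumes the form loss = a * compute^b and uses made-up data points (not the numbers from the plots above). It fits the line in log-log space and reads off a prediction for a larger compute budget. The function names `fit_power_law` and `extrapolate` are hypothetical.

```python
import math

def fit_power_law(xs, ys):
    """Fit y = a * x**b by least squares in log-log space; returns (a, b)."""
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    n = len(lx)
    mx, my = sum(lx) / n, sum(ly) / n
    # Slope of the straight line through the log-log points.
    b = sum((u - mx) * (v - my) for u, v in zip(lx, ly)) / sum((u - mx) ** 2 for u in lx)
    a = math.exp(my - b * mx)
    return a, b

def extrapolate(a, b, x):
    """Follow the fitted trend-line out to a new x."""
    return a * x ** b

# Hypothetical (compute, loss) points generated from loss = 10 * compute**-0.05.
compute = [1e18, 1e19, 1e20, 1e21]
loss = [10 * c ** -0.05 for c in compute]
a, b = fit_power_law(compute, loss)
print(round(b, 3))              # -0.05
print(extrapolate(a, b, 1e23))  # predicted loss at 100x more compute than the largest run
```

Real scaling data is noisy and the trend may bend eventually; the sketch only shows what "following the trend-line" means mechanically.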

Finally, we must relate performance (e.g. as measured in the above plots by negative-log-likelihood) with capabilities. As performance of a model improves, there is one thing we know for sure will occur: it will make better predictions on data similar to that upon which it has been trained. Currently, it is a completely reasonable hypothesis that a scaled-up neural network can learn to recognize any pattern that a human might recognize, given that it has been trained on sufficient data.
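For readers unfamiliar with the metric: negative-log-likelihood scores a model by the probability it assigned to what actually happened, so lower is better. A minimal sketch, assuming we already have, for each position, the probability the model gave to the observed token (the probability lists are invented for illustration):

```python
import math

def mean_nll(probs_of_correct):
    """Average negative log-likelihood, in nats, of the observed tokens.

    probs_of_correct[i] is the probability the model assigned to the token
    that actually occurred at position i.
    """
    return -sum(math.log(p) for p in probs_of_correct) / len(probs_of_correct)

# Hypothetical per-token probabilities from a weaker and a stronger model.
weak = [0.1, 0.2, 0.1, 0.25]
strong = [0.6, 0.7, 0.5, 0.8]
print(mean_nll(weak))    # larger: the weak model was often surprised
print(mean_nll(strong))  # smaller: better predictions on this data
```

A falling NLL says the model predicts data like its training data better; the essay's hypothesis is about how far that translates into capabilities.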

If the above hypothesis holds, we are already capable (in principle) of creating an AI which is human-level for any set of tasks. All we need is a massive dataset containing examples of expert human behavior on each task, and a model large enough to learn from that dataset. We can then train an AI whose ability is equal to that of the best human on every task in the set. And in fact, it may be more capable — for example, even the best humans may occasionally make careless mistakes, but not the AI.

Already, labs have begun collecting the data needed to train highly capable general agents. This will take time, effort, and resources — it will not happen overnight. But over the next decade, I predict that we will see more and more areas of human activity concretized into deep-learnable forms. In any given domain, once this occurs, automation will not be far behind, as large-scale AIs develop human-level abilities in that domain.

2. We Will Eventually Be Bottlenecked By Our Datasets

Generalization has its limits. Patterns which are not represented in the training data will not be captured by our models. And the more complex a pattern is, the more training data is required for that pattern to be identifiable. For example, as I have pointed out before, GPT-style models trained on just human data will likely never play chess at a vastly superhuman level: the patterns governing the relative value of chess positions are extraordinarily complex, and it is unlikely that a model trained on human gameplay alone will be able to generalize to vastly superhuman gameplay. More generally, the point here is that the model’s abilities are bottlenecked by the dataset-construction abilities of the humans who trained it. If the data includes lots of highly relevant expert demonstrations, the model will be highly capable; but if the dataset is terrible, the model will generally be terrible also.[1]
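An extreme caricature of this bottleneck is a lookup-table imitation learner with no generalization at all: it can only reproduce actions that appear in its demonstrations. Real neural networks do generalize somewhat, so this overstates the effect, but it shows the direction. The state and action names below are hypothetical, standing in for the amateur-chess example in the footnote.

```python
from collections import Counter, defaultdict

def fit_imitation_policy(demos):
    """Count-based behavior cloning: P(action | state) = relative frequency in demos."""
    counts = defaultdict(Counter)
    for state, action in demos:
        counts[state][action] += 1
    policy = {}
    for state, c in counts.items():
        total = sum(c.values())
        policy[state] = {a: n / total for a, n in c.items()}
    return policy

def prob(policy, state, action):
    """Probability the policy plays `action` in `state` (0.0 if never demonstrated)."""
    return policy.get(state, {}).get(action, 0.0)

# Hypothetical amateur games: nobody ever castles in this position.
demos = [("opening", "push_pawn"), ("opening", "develop_knight"), ("opening", "push_pawn")]
policy = fit_imitation_policy(demos)
print(prob(policy, "opening", "push_pawn"))  # about 0.667
print(prob(policy, "opening", "castle"))     # 0.0: absent from the data, so never played
```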

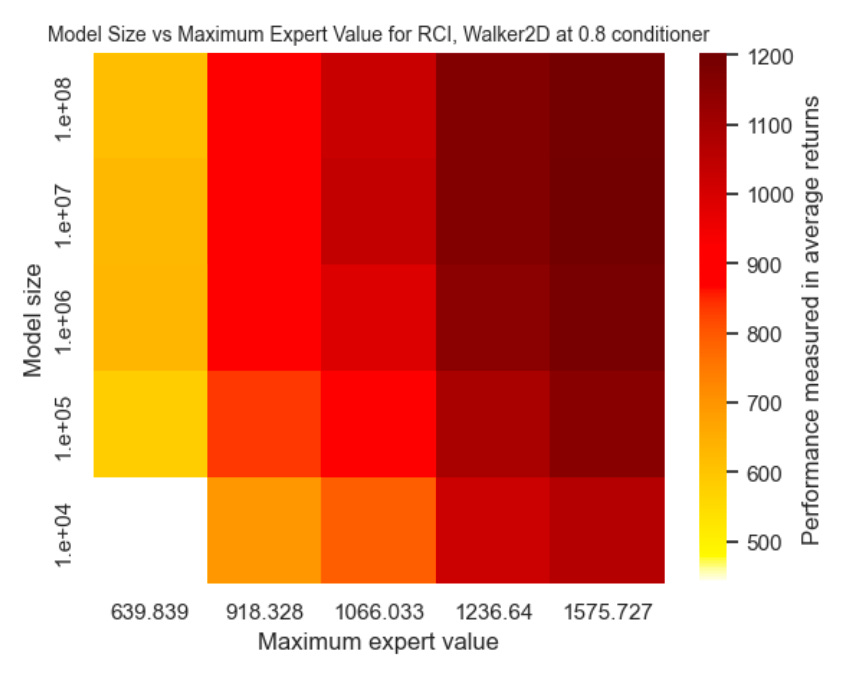

There are superhuman deep learning chess AIs, like AlphaZero; crucially, these models acquire their abilities by self-play, not passive imitation. It is the process of interleaving learning and interaction that allows the AI’s abilities to grow indefinitely. The AI needs the ability to try things out for itself, so that it can see the outcomes of its actions and correct its own mistakes. In so doing, it can catch mistakes made by expert humans, and thereby surpass them.
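The difference between passive imitation and learning by interaction can be shown with a toy multi-armed bandit. This is an assumption made for illustration; AlphaZero itself combines self-play with tree search, which this sketch does not attempt. The hypothetical "expert" always plays a decent but suboptimal arm. An imitator copies that choice forever, while an epsilon-greedy agent that tries things and observes outcomes can discover the better arm. `ARM_MEANS`, `EXPERT_ARM`, and both agent functions are invented names.

```python
import random

ARM_MEANS = [0.3, 0.6, 0.9]  # hypothetical payoff probabilities; arm 2 is best
EXPERT_ARM = 1               # hypothetical human expert always plays arm 1

def pull(rng, arm):
    """Bernoulli reward: 1.0 with probability ARM_MEANS[arm]."""
    return 1.0 if rng.random() < ARM_MEANS[arm] else 0.0

def imitation_agent(expert_demos):
    """Passive learner: copy the most common action in the demonstrations."""
    return max(set(expert_demos), key=expert_demos.count)

def interactive_agent(rng, steps=2000, epsilon=0.1):
    """Epsilon-greedy: act, observe the outcome, update the estimate, repeat."""
    counts = [0] * len(ARM_MEANS)
    values = [0.0] * len(ARM_MEANS)
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(len(ARM_MEANS))                       # explore
        else:
            arm = max(range(len(ARM_MEANS)), key=lambda a: values[a])  # exploit
        reward = pull(rng, arm)
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]  # running mean of rewards
    return max(range(len(ARM_MEANS)), key=lambda a: values[a])

rng = random.Random(0)
print("imitation picks arm", imitation_agent([EXPERT_ARM] * 100))  # 1, the expert's choice
print("interaction picks arm", interactive_agent(rng))
```

With these settings the interactive agent should settle on arm 2 with overwhelming probability, because its own trials let it correct the expert's mistake.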

The study of neural networks which interact and collect their own data is called active deep learning or deep reinforcement learning. This is a different paradigm than that under which GPT-3 and GPT-4 models were trained, which I will call passive deep learning. Passive learning keeps the data-collection and model-training phases separate: the human decides on the training data, and then the model learns from it. But in active/reinforcement learning, the model itself is “in the loop”. New data is collected on the basis of what the model currently understands, and so in principle, this could allow the model to ultra-efficiently collect the precise datapoints that allow it to fill in holes in its knowledge.
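Why the model-in-the-loop setup is attractive in principle can be seen in the simplest possible case: learning a hidden threshold on the interval [0, 1]. In this idealized sketch (the threshold value and function names are hypothetical), the passive learner receives uniformly random labeled points chosen up front. The active learner always queries the point it is most unsure about, which here reduces to binary search. This is a best case in which the learner knows exactly which point is most informative; the essay's argument is that for neural networks we have no comparable way to know.

```python
import random

TRUE_THRESHOLD = 0.618  # hypothetical; unknown to the learner

def oracle(x):
    """Label a point: 1 if x is at or above the hidden threshold."""
    return 1 if x >= TRUE_THRESHOLD else 0

def passive(n_labels, rng):
    """Data chosen up front: n uniform random points. Returns remaining uncertainty."""
    lo, hi = 0.0, 1.0  # the threshold is known to lie in [lo, hi]
    for _ in range(n_labels):
        x = rng.random()
        if oracle(x):
            hi = min(hi, x)
        else:
            lo = max(lo, x)
    return hi - lo

def active(n_labels):
    """Model in the loop: query the midpoint of the current uncertainty interval."""
    lo, hi = 0.0, 1.0
    for _ in range(n_labels):
        x = (lo + hi) / 2
        if oracle(x):
            hi = x
        else:
            lo = x
    return hi - lo

rng = random.Random(0)
print(passive(20, rng))  # random data: width shrinks only roughly like 1/n
print(active(20))        # 2**-20, about 9.5e-07
```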

Data is to a deep-learning-based agent what code is to a good old-fashioned AI, so active/reinforcement learning agents are recursively self-improving programs, and it’s worth seriously considering whether there is any risk that an active learning agent could undergo a fast takeoff.

Fortunately, at the moment, there isn’t: algorithms for active learning with neural networks are uniformly terrible. Collecting data using any current active-learning algorithm is little better than flailing around randomly. We get a superintelligence explosion only if the model can collect its own data more efficiently than humans can create datasets. Today, this is still far from true.

3. Active Learning Is Hard & Fundamental

To recap: a fast-takeoff scenario requires an AI that is both able to learn from data and choose what data to collect. We’ve certainly seen massive progress on the former, but I claim that we’ve seen almost no progress on the latter.

Thanks to publication bias, it’s always a bit hard to measure non-progress, but by reading between the lines a bit, we can fill in some gaps. First, note that most of the recent impressive advances in capabilities — DALL-E/Imagen/Stable Diffusion, GPT-3/Bard/Chinchilla/Llama, etc. — have used the same approach: namely, passive learning on a massive human-curated Internet-scraped dataset. This should raise some eyebrows, because the absence of active learning is unlikely to be an oversight: many of the labs behind these models (e.g. DeepMind) are quite familiar with RL, have invested in it heavily in the past, and certainly would have tried to leverage it here. The absence of RL is conspicuous.

The sole examples that break this pattern are chatbot products like ChatGPT/Claude/GPT-4, which advertise an “RLHF fine-tuning” phase as being important to their value. This gives a great window into the efficacy of these methods. Does RLHF increase capabilities? Let’s take a look at the GPT-4 technical report:

> The model’s capabilities on exams appear to stem primarily from the pre-training process and are not significantly affected by RLHF. On multiple choice questions, both the base GPT-4 model and the RLHF model perform equally well on average across the exams we tested.

OpenAI didn’t release concrete numbers around how much human feedback was collected, but given the typical scope of OpenAI’s operations, it’s probably safe to assume that this was the largest-scale human-data-collection endeavor of all time. Despite this, they saw negligible improvements in capabilities.[2] This is consistent with my claim that current active learning algorithms are too weak to lead to a fast takeoff.

Another negative space in which we can find insight is the stagnation of the subfield of deep reinforcement learning. In ~2018, there was a wave of AI hype centered around DRL: we saw AlphaZero and OpenAI Five beating human pros at Go and Dota, self-driving cars being trained to drive, robotic hands doing grasping, etc. But the influx of impressive demos began to slow to a crawl, and in the past five years, the hype has fizzled out almost completely. The impressive abilities of DRL turned out to be restricted to a very small set of situations: tasks that could be reliably and cheaply simulated, such that we could collect an enormous number of interactions very fast. This is consistent with my claim that active learning algorithms are weak, because in precisely these situations, we can compensate for the fact that active learning is inefficient by cheaply collecting vastly more data. But most useful real-world tasks do not have this property, including many of those required for general superintelligence.

Although the empirical picture I have painted is not a rosy one, that evidence alone may not be convincing. To complete the picture, I want to give a high-level explanation of why active learning is so difficult.

Firstly, let’s understand our goal. A good active learning algorithm is one that lets us learn efficiently: it prioritizes collecting datapoints that tell our model a lot of new information about the world, and avoids collecting datapoints that are redundant with what we have already seen. It comes down to understanding that not all datapoints are equally valuable, and having an algorithm to assess which new data will be the most valuable to add to any given dataset.
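One classical heuristic for deciding which datapoint would be most valuable is query-by-committee: keep several models that all fit the data seen so far, and ask for labels where they disagree most. Points where the committee is unanimous are treated as redundant. This is a named technique from the active-learning literature, not a method proposed in this essay, and the committee below (four threshold classifiers) is hypothetical. In the essay's view, heuristics like this have not been made to work reliably for large neural networks.

```python
import math

def vote_entropy(votes):
    """Disagreement of a committee's binary votes, in bits (0 = unanimous)."""
    p = sum(votes) / len(votes)
    if p in (0.0, 1.0):
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))

def rank_candidates(committee, candidates):
    """Sort unlabeled candidates from most to least contested."""
    scored = [(vote_entropy([model(x) for model in committee]), x) for x in candidates]
    return sorted(scored, reverse=True)

# Hypothetical committee: threshold classifiers that all agree with the labels seen
# so far but disagree about where exactly the decision boundary lies.
committee = [lambda x, t=t: int(x >= t) for t in (0.55, 0.60, 0.65, 0.70)]
candidates = [0.1, 0.58, 0.62, 0.9]
for score, x in rank_candidates(committee, candidates):
    print(f"x={x:.2f}  disagreement={score:.2f}")
```

The two candidates far from the contested region score zero, so they would never be queried; the point at 0.62, where the committee splits evenly, comes first.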

It is clear that to do this, we need to deeply understand the relationship between our data, our learning algorithm, and our predictions. We need to know: if we added some particular data point, how would our predictions change? On which inputs would we become more (or less) confident? In other words: how would our model generalize from this new information?
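For a tiny model, the question "how would our predictions change if we added this point?" has a brute-force answer: retrain with and without the point and compare. A minimal sketch on a hypothetical 1-D linear regression (the data and function names are invented for illustration):

```python
def fit_line(points):
    """Ordinary least squares for y = w*x + b."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    sxx = sum((x - mx) ** 2 for x, _ in points)
    w = sum((x - mx) * (y - my) for x, y in points) / sxx
    return w, my - w * mx

def prediction_shift(data, candidate, test_inputs):
    """How much would predictions move if `candidate` were added? Retrain and compare."""
    w0, b0 = fit_line(data)
    w1, b1 = fit_line(data + [candidate])
    return [(w1 * x + b1) - (w0 * x + b0) for x in test_inputs]

data = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0)]
print(prediction_shift(data, (1.0, 1.0), [0.0, 5.0]))   # [0.0, 0.0]: redundant point
print(prediction_shift(data, (10.0, 0.0), [0.0, 5.0]))  # surprising point: large shift
```

For a large network, retraining once per candidate datapoint is far too expensive, so an efficient active learner would need to predict these shifts without retraining, which is exactly the question of how the network generalizes.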

Understanding neural network generalization has long been the most important & challenging unsolved problem in the field. Large neural networks reliably generalize far beyond their training data, but nobody has any idea why, or any way of predicting where or when this generalization will occur. Empirical approaches have been no more successful than theoretical ones. This is a deep and fundamental problem at the very heart of the field, and it will be a massively important breakthrough when somebody solves it.

Once we recognize that efficient active learning is as hard as understanding generalization, it stops being surprising that active learning is difficult and unsolved, since all problems that require understanding generalization are difficult and unsolved (another such problem is preventing adversarial examples). Also, they aren’t getting more-solved over time: we’ve made little-to-no progress on any problem of this sort in the last decade, certainly not the reliable improvements of the sort we’ve seen from supervised learning. This indicates that a breakthrough is needed — and that it is unlikely to be close.

Something that would change my mind on this is if I saw real progress on any problem that is as hard as understanding generalization, e.g. if we were able to train large networks without adversarial examples.

Many thanks to Richard Ngo, David Krueger, Alexey Guzey, and Joel Einbinder for their feedback on drafts of this post.

[1] As it turns out, it is actually possible to construct tasks where learning to imitate a dataset of terrible play can lead to a good agent (especially using a conditional-imitation method, essentially prompting the agent to play well). But it’s just as easy to construct tasks where this definitely won’t happen, so this isn’t a reliable way to get to good performance. In other words: we can guarantee that we will do as well as the best experts; we sometimes might do better, but can’t guarantee that we will. Most real-world tasks of note seem to be of the latter type. For an intuitive example: consider a chess agent learning from a dataset of complete amateurs, where nobody castles or captures en passant. The agent could never even learn that those moves are legal, let alone learn to play at a superhuman level.

[2] Yes, RLHF was arguably somewhat useful in other ways; but right now we are focused on the question of whether active/reinforcement learning could lead to a “fast takeoff”, and it is capabilities that are relevant for that question.

Overnight? Very probably not. But few futurists are actually suggesting it'll happen "overnight" in the literal sense. They're often speaking comparatively, relative to the progress and advancements of the past.

The thing about technological progress over time is that humans are rather poor at differentiating between linear progress and so-called "exponential progress". Exponential progress is trickier to understand, but it better describes the way some technologies enable greater advancements more rapidly than before. And up until recently, there wasn't much functional difference in the pace of change between the two.

Someday, imo likely soon, with one of the next iterations of GPT or some other LLM, someone will figure out the right combination of prompting loop-back methods, or the right way to use a team of AIs with various API access, and they'll start automating portions of a business; then someone will use it to automate the management of an entire business. And if it generates more profit than human managers, we'll rapidly see entire industries begin automating using autonomous AI agents. Unless we change the profit motives, it's an obvious chain of events. And all it takes is for the right industries to automate before it becomes a self-improving feedback loop. Again, that process doesn't happen overnight, but looking back on a year's worth of progress, it'll be shocking how much happens.

You make some very interesting points! The whole idea that the data is going to be a bottleneck made me think. As you said, we need to either get bigger better datasets from somewhere, or figure out which datapoints are actually important for learning and focus on those. I wonder if that second problem might produce some solutions that spill over into our ability to teach things to humans as well.